It is our pleasure to announce that the NitroPC Pro 2 is officially certified for Qubes OS Release 4!

The NitroPC Pro 2: a secure, powerful workstation

The NitroPC Pro 2 is a workstation for high security and performance requirements. The open-source Dasharo coreboot firmware ensures high transparency and security while avoiding backdoors and security holes in the firmware. The device is certified for compatibility with Qubes OS 4 by the Qubes developers. Carefully selected components ensure high performance, stability, and durability. The Dasharo Entry Subscription guarantees continuous firmware development and fast firmware updates.

Here’s a summary of the main component options available for this mid-tower desktop PC:

| Component | Options |
|---|---|
| Motherboard | MSI PRO Z790-P DDR5 (Wi-Fi optional) |
| Processor | 14th Generation Intel Core i5-14600K or i9-14900K |
| Memory | 16 GB to 128 GB DDR5 |
| NVMe storage (optional) | Up to two NVMe PCIe 4.0 x4 SSDs, up to 2 TB each |
| SATA storage (optional) | Up to two SATA SSDs, up to 7.68 TB each |
| Wireless (optional) | Wi-Fi 6E, 2400 Mbps, 802.11/a/b/g/n/ac/ax, Bluetooth 5.2 |
| Operating system (optional) | Qubes OS 4.2 or Ubuntu 22.04 LTS |

Of special note for Qubes users, the NitroPC Pro 2 features a combined PS/2 port that supports both a PS/2 keyboard and a PS/2 mouse simultaneously with a Y-cable (not included). This allows for full control of dom0 without the need for USB keyboard or mouse passthrough. Nitrokey also offers a special tamper-evident shipping method for an additional fee. With this option, the case screws will be individually sealed and photographed, and the NitroPC Pro 2 will be packed inside a sealed bag. Photographs of the seals will be sent to you by email, which you can use to determine whether the case was opened during transit.

Important note: When configuring your NitroPC Pro 2 on the Nitrokey website, there is an option for a discrete graphics card (e.g., Nvidia GeForce RTX 4070 or 4090) in addition to integrated graphics (e.g., Intel UHD 770, which is always included because it is physically built into the CPU). NitroPC Pro 2 configurations that include discrete graphics cards are not Qubes-certified. The only NitroPC Pro 2 configurations that are Qubes-certified are those that contain only integrated graphics.

The NitroPC Pro 2 also comes with a “Dasharo Entry Subscription,” which includes the following:

- Access to the latest firmware releases

- Exclusive newsletter

- Special updates, including early access to updates enhancing privacy, security, performance, and compatibility

- Early access to new firmware releases for newly-supported desktop platforms (please see the roadmap)

- Access to the Dasharo Premier Support invite-only live chat channel on the Matrix network, allowing direct access to the Dasharo Team and fellow subscribers with personalized and priority assistance

- Insider’s view and influence on the Dasharo feature roadmap for a real impact on Dasharo development

- Dasharo Tools Suite Entry Subscription keys

For further product details, please see the official NitroPC Pro 2 page.

Special note regarding the need for kernel-latest on Qubes 4.1

Beginning with Qubes OS 4.1.2, the Qubes installer includes the kernel-latest package and allows users to select this kernel option from the GRUB menu when booting the installer. At the time of this announcement, kernel-latest is required for the NitroPC Pro 2’s graphics drivers to function properly on Qubes OS 4.1. It is not required on Qubes OS 4.2. Therefore, potential purchasers and users of this model who intend to run Qubes 4.1 should be aware that they will have to select a non-default option (Install Qubes OS RX using kernel-latest) from the GRUB menu when booting the installer. However, since Linux 6.1 has officially been promoted to being a long-term support (LTS) kernel, it will become the default kernel at some point, which means that the need for this non-default selection is only temporary.

About Nitrokey

Nitrokey is a world-leading company in open-source security hardware. Nitrokey develops IT security hardware for data encryption, key management and user authentication, as well as secure network devices, PCs, laptops, and smartphones. The company was founded in Berlin, Germany in 2015 and already counts tens of thousands of users from more than 120 countries, including numerous well-known international enterprises from various industries, among its customers. Learn more.

About Dasharo

“Dasharo is an open-source firmware distribution focusing on seamless deployment, clean and simple code, long-term maintenance, professional support, transparent validation, superior documentation, privacy-respecting implementation, liberty for the owners and trustworthiness for all.” —the Dasharo documentation

Dasharo is a registered trademark of and a product developed by 3mdeb.

What is Qubes-certified hardware?

Qubes-certified hardware is hardware that has been certified by the Qubes developers as compatible with a specific major release of Qubes OS. All Qubes-certified devices are available for purchase with Qubes OS preinstalled. Beginning with Qubes 4.0, in order to achieve certification, the hardware must satisfy a rigorous set of requirements, and the vendor must commit to offering customers the very same configuration (same motherboard, same screen, same BIOS version, same Wi-Fi module, etc.) for at least one year.

Qubes-certified computers are specific models that are regularly tested by the Qubes developers to ensure compatibility with all of Qubes’ features. The developers test all new major versions and updates to ensure that no regressions are introduced.

It is important to note, however, that Qubes hardware certification certifies only that a particular hardware configuration is supported by Qubes. The Qubes OS Project takes no responsibility for any vendor’s manufacturing, shipping, payment, or other practices, nor can we control whether physical hardware is modified (whether maliciously or otherwise) en route to the user.

Similarly, I often see fakes appear shortly after a major event.

Last Saturday (Oct 14), we had a great solar eclipse pass over North and South America. This was followed by some incredible photos -- some real, some not.

I tried to capture a photo of the eclipse by holding my special lens filter over my smartphone's camera. Unfortunately, my camera decided to automatically switch into extended shutter mode. As a result, the Sun is completely washed out. However, the bokeh (small reflections made by the lens) clearly show the eclipse.

I showed this photo to a friend, and he one-upped me. He had tried the same thing and had a perfect "ring of fire" captured by the camera. Of course, I immediately noticed something odd. I said, "That's not from Fort Collins." I knew this because we were not in the path of totality. He laughed and said he was in New Mexico for the eclipse.

Ring of Truth

Following the eclipse, FotoForensics has received many copies of the same viral image depicting the eclipse over a Mayan pyramid. Here's one example:

The first time I saw this, I immediately knew it was fake. Among other things:

- The text above the picture has erasure marks. These appear as some black marks after the word "Eclipse" and below the word "day". Someone had poorly erased the old text and added new text.

- The Sun is never that large in the sky.

- If the Sun is behind the pyramid, then why is the front side lit up? Even the clouds show the sunlight on the wrong side.

These include sightings from Instagram, LinkedIn, Facebook, TikTok, the service formerly known as Twitter, and many more. Everyone shared the photo, and I could not find anybody who noticed that it was fake.

Ideally, we'd like to find the source image. This becomes the "smoking gun" piece of evidence that proves this eclipse photo is a fake. However, without that, we can still use logic, reasoning, and other clues to conclusively determine that it is a forgery.

Looking Closely

Image forensics isn't just about looking at pixels and metadata. It's also about fact checking. And in this case, the facts don't line up. (The only legitimate "facts" in this instance are that (1) there is a Mayan pyramid at Chichén Itzá in Yucatán, Mexico, and (2) there was an eclipse on Saturday, October 14.)

- The Moon's orbit around the Earth isn't circular; it's an ellipse. When a full moon happens at perigee (closest to the Earth), it looks larger and we call it a "super-moon". A full moon at apogee (furthest away) is a "mini-moon" because it looks smaller. Similarly, if an eclipse happens when the Moon is really close to the Earth, then it blocks out almost all of the Sun. However, the Oct 14 eclipse happened when the Moon was further away. While the Moon blocked most of the Sun, it did not cover all of the Sun. Real photos of this eclipse show a thick ring of the Sun around the Moon, not a thin ring of the corona that is shown in this forgery.

- I went to the NASA web site, which shows the full path of the total eclipse. The path of totality for this eclipse did go through a small portion of Yucatán, but it did not go through Chichén Itzá. At best, a photo from Chichén Itzá should look more like my photo: a crescent of the eclipse.

- At Chichén Itzá, the partial eclipse happened at 11:25am - 11:30am (local time), so the Sun should be almost completely overhead. In the forgery, the Sun is at the wrong angle. (See Sky and Telescope's interactive sky chart. Set it for October 14, 2023 at 11:25am, and the coordinates should be 20° 40' N, 88° 34' W.)

- Google Maps has a great street-level view of the Mayan pyramid. The four sides are not the same. In particular, the steps on the South side are really eroded, but the North side is mostly intact. Given that the steps in the picture are not eroded, I believe this photo is facing South-East (showing the North and West side of the pyramid), but it's the wrong direction for the eclipse. (The eclipse should be due South by direction and very high in the sky.)

- Google Street View, as well as other recent photos, show a roped off area around the pyramid. (I assume it's to keep tourists from touching it.) The fencing is not present in this photo.

- The real pyramid at Chichén Itzá has a rectangular structure at the top. Three of the sides have one doorway each, while the North-facing side has three doorways (a big opening with two columns). In this forgery, we know it's not showing the South face because both stairways are intact. (As I mentioned, the South-facing stairwell is eroded.) However, the North face should have three doorways at the top. The visible sides in the photo have one doorway each, meaning that it can't be showing the North face. If it isn't showing the North side and isn't showing the South side, then it's not the correct building.

I can't rule out that the entire image may be computer generated or from some video game that I don't recognize. However, it could also be a photo from something like a museum diorama depicting what the pyramid may have looked like over a thousand years ago. (Those museum dioramas almost never have people standing on the miniature lawns.)

In any case, the eclipse was likely added after the pyramid photo was created.

Moon Shot

While I couldn't find the basis for this specific eclipse photo, I did see what people claim is a second photo of this same eclipse at the same Mayan pyramid. I found this version of it at Facebook, but it's also being virally spread across many different social media platforms.

Now keep in mind, I've already debunked the size of the Sun, the totality of the eclipse, and the angle above the horizon. This picture also has the same problem with the wrong side of the pyramid being in shadow. Moreover, it contradicts the previous forgery: it shows the eclipse happening on the other side of the pyramid, no people, and different cloud coverage at the same time on the same day.

With this second forgery, I was able to find the source image. The smoking gun comes from a desktop wallpaper background that has been available since at least 2009:

In this case, someone started with the old desktop wallpaper image, gave it a red tint, added clouds, and inserted a fake solar eclipse.

Total Eclipse of the Art

It's easy enough to say "it's fake" and to back it up with a single claim (e.g., wrong shadows). However, if this were a court case or a legal claim, you'd want to list as many issues as possible. A single claim could be contested, but a variety of provable inconsistencies undermines any authenticity allegedly depicted by the photo.

The same skills needed to track down forgeries like this are used for debunking fake news, identifying photo authenticity, and validating any kind of photographic claim. Critical thinking is essential when evaluating evidence. The outlandish claims around a photo should be grounded in reality and not eclipse the facts.

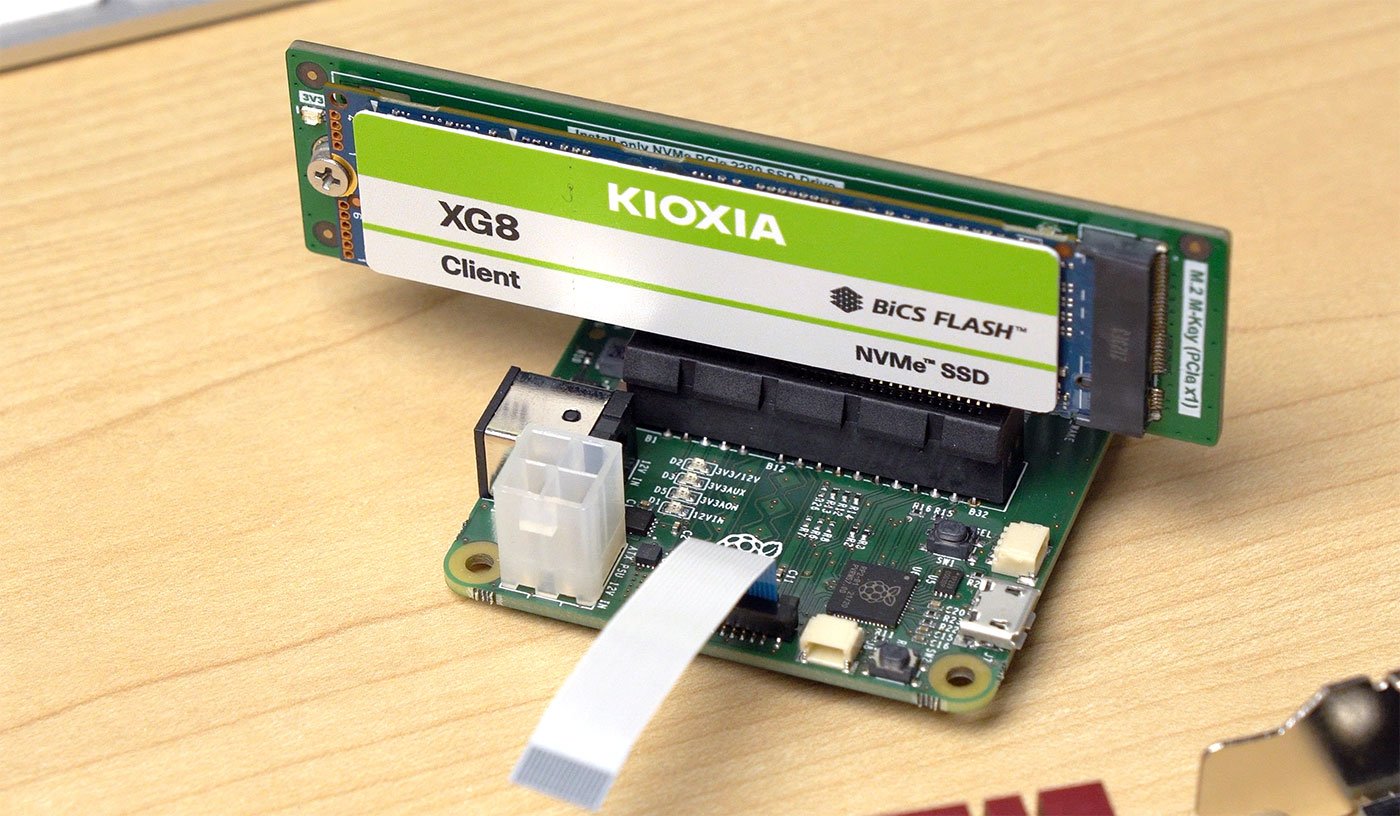

In my video about the brand new Raspberry Pi 5, I mentioned the new external PCIe port makes it possible to boot the standard Pi 5 model B directly off NVMe storage—an option which is much faster and more reliable than standard microSD storage (even with industrial-rated cards!).

Enabling NVMe boot is pretty easy: you add a line to /boot/config.txt, modify the BOOT_ORDER in the bootloader configuration, and reboot!

A few weeks back I was reminded that the source code to the 386MAX (later Qualitas MAX) memory manager was released in 2022 on GitHub. Back in the 1990s I used primarily EMM386 and QEMM, but I have some experience with 386MAX as well. It’s quite comparable with QEMM in terms of features and performance.

The source code itself is quite interesting. The core memory manager is of course written 100% in assembler. However, it is very well commented assembly code, written in uniform style, and it generally gives the impression of a well maintained code base (unlike, say, MS-DOS).

The GitHub release also includes enough binaries that one can experiment with 386MAX without building a monster code base.

I was able to get enough of it installed to have a functional 386MAX setup. Then I thought that for easier experimentation, perhaps I should set up a boot floppy with 386MAX. And that’s where trouble struck.

My boot floppy had a nasty tendency to horribly crash and burn (the technical term is ‘triple fault’) when loading additional drivers in some configurations. This is what the CONFIG.SYS looked like:

FILES=30

BUFFERS=10

DOS=HIGH,UMB

DEVICE=386MAX.SYS USE=E000-EFFF NOWIN3

DEVICEHIGH=POWER.EXE

DEVICEHIGH=VIDE-CDD.SYS /D:CD001

After some experimentation, I narrowed the problem to the following combination: 386MAX provides “high DOS memory” (RAM between 640K and 1M), DOS has UMB support enabled (via DOS=UMB), drivers are loaded into UMBs via DEVICEHIGH statement, and the system (VM really) has more than 16 MB RAM. The same configuration worked fine when loading from hard disk, or with 16 MB RAM (or less).

That gave me enough clues to suspect problems with DMA. In a setup using traditional IDE (no bus mastering), there’s no DMA involved. But when booting from a floppy, the data is transferred using DMA. If the DMA controller can’t reach memory because it’s beyond 16 MB, there will be trouble.

386MAX needs a DMA buffer to deal with situations when memory involved in a DMA transfer is paged, and the pages are not contiguous and/or are out of the DMA controller’s reach. But what if the DMA buffer itself is above 16 MB?

I already had a small utility to print information about a VDS (Virtual DMA Services) provider. It was trivial to add a function to print the physical address of the DMA buffer. There is typically one DMA buffer that can be allocated through VDS and it may also be used by the memory manager to deal with floppy DMA transfers. Sure enough, in a configuration with 32 MB RAM, 386MAX had a DMA buffer close to 32 MB! That would definitely not work with floppy transfers. But why?!

It was time to trawl through the source code. It was not hard to find the logic for allocating the DMA buffer. Because 386MAX supports old and exotic systems, the logic is fairly involved. The most unusual is an Intel Inboard 386 PC — a 386 CPU inside a PC or PC/XT system. In that case, the DMA buffer must be allocated below 1 MB (really below 640 KB). On a PC/AT compatible machine, it must lie below 16 MB. Except on old Deskpro 386 machines, the last 256 or so KB aren’t accessible through the DMA controller and the DMA buffer has to be placed a little below 16 MB. Finally on EISA systems, the 8237 compatible DMA controller can address the entire 32-bit space, and the DMA buffer can be anywhere.

But VirtualBox VMs don’t pretend to support EISA. Why would 386MAX think it’s running on an EISA system? Of course the source code had the answer. When 386MAX detects a PCI system (i.e. system with a PCI BIOS), it assumes EISA style DMA support!
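The placement rules described above can be summarized in a few lines. The following is only an illustrative sketch in Python, not 386MAX's actual (assembly) logic: the `System` enum, the function name, and the `pci_implies_eisa` switch are hypothetical, and the Deskpro 386 figure is an assumption based on the "256 or so KB" mentioned in the text.

```python
from enum import Enum, auto

KB = 1024
MB = 1024 * KB


class System(Enum):
    """Hypothetical machine classes, named after the cases in the text."""
    INBOARD_PC = auto()   # Intel Inboard 386 in a PC or PC/XT
    PC_AT = auto()        # plain PC/AT compatible
    DESKPRO_386 = auto()  # old Deskpro 386
    EISA = auto()
    PCI = auto()


def dma_buffer_ceiling(system: System, pci_implies_eisa: bool = True) -> int:
    """Return the exclusive upper bound for the DMA buffer's physical address.

    pci_implies_eisa=True mirrors the behavior described in the text,
    where a PCI BIOS is taken as proof of EISA style 32-bit DMA.
    """
    if system is System.INBOARD_PC:
        return 640 * KB
    if system is System.DESKPRO_386:
        # Assumption: the text only says "256 or so KB" are unreachable.
        return 16 * MB - 256 * KB
    if system is System.EISA or (system is System.PCI and pci_implies_eisa):
        return 1 << 32
    return 16 * MB  # PC/AT style 24-bit DMA addressing
```

With the default switch, `dma_buffer_ceiling(System.PCI)` yields 4 GB, which is how a buffer near 32 MB becomes possible. Passing `pci_implies_eisa=False` gives the 16 MB limit that a PIIX-class machine actually needs.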

Why oh Why?!

At first glance that makes no sense. But on closer look it does, sort of. Early PCI machines were generally Pentium or 486 systems with Intel chipsets. These were almost guaranteed to use the SIO or SIO.A southbridge (82378IB or 82378ZB SIO, or 82378AB SIO.A). And those chipsets did in fact include EISA DMA functionality with full 32-bit addressing!

In 1996, Intel released the 82371FB (PIIX) and 82371SB (PIIX3) southbridge. The PIIX/PIIX3 southbridge no longer included EISA DMA functionality and the 8237 compatible DMA controller could only address 16 MB RAM.

It is hard to be certain but the development of 386MAX probably effectively stopped sometime around 1997. At that time, PCI machines were becoming common, but not necessarily with more than 16 MB RAM and running DOS. The problem may have slipped under the radar, especially because it probably in practice only affected booting with 386MAX from floppy.

Was it Always Wrong?

That said… I believe that placing the DMA buffer above 16 MB is always wrong in a system with ISA slots. On an EISA (or early PCI) system, the 8237 DMA controller can indeed address the entire 32-bit address space, and that takes care of floppy transfers. But there are also ISA bus-mastering adapters, such as some SCSI HBAs or network cards. And those cannot address more than 16 MB.

If a driver for an ISA bus-mastering adapter uses VDS to allocate a DMA buffer, it expects that the buffer will be addressable by the adapter. That will not be the case when 386MAX allocates the DMA buffer above 16 MB.

Again, the scenario may be exotic enough that it could have slipped through the cracks. Owners of EISA systems would be likely to use EISA storage and network adapters, not ISA. Likewise PCI machines would presumably utilize PCI storage and network adapters. Thus the problem would not be visible.

At the same time the system would actually need to have more than 16 MB installed for the problem to show up. That was quite uncommon in the days of 386MAX, let’s say 1995 and earlier. Machines with more RAM did exist, but were vastly more likely to run NetWare, OS/2, Windows NT, or some UNIX variant. At the same time, a PCI machine with 32 MB RAM or more and the older SIO or SIO.A southbridge would actually work, at least for floppy transfers, with the DMA buffer anywhere in memory.

It should be noted that the VDS specification does not explicitly take this situation into account. It mentions that the VDS scatter/gather lock function may return memory not addressable by DMA, and in that case drivers are supposed to use the dedicated DMA buffer. The DMA buffer is assumed to be always addressable through DMA, but no mention is made of the 16 MB limit on ISA systems. Given that the VDS specification was initially written in 1990 and last updated in 1992, it is entirely plausible that machines with more than 16 MB RAM were not high on the spec writers’ priority list.

Workarounds?

386MAX has a NOEISADMA option which forces the DMA buffer to be allocated below 16 MB. This option does not appear to be well documented, but finding it was not difficult when reviewing the 386MAX source code. This at least partially solves the problem.

Of course a much better solution would be fixing 386MAX to not assume EISA style DMA on PCI systems. Although the assumption was true on early PCI systems, it is not true in general.

But Wait, There’s More!

Later I realized that there’s another factor in the whole mess, the NOWIN3 keyword. The NOWIN3 keyword removes support for Windows 3.x, thus saving memory, and also makes it unnecessary to have 386MAX.VXD in the same directory as 386MAX.SYS (useful when booting from a floppy).

But… of course… the NOWIN3 keyword does more than that. And that’s where the well commented 386MAX source code was really helpful. By default, when 386MAX supports Windows 3.x, the memory manager makes sure that UMB memory is physically below 16 MB. A comment says:

; If we're providing Win3 support, ensure that the PTEs

; we're to use to high DOS memory are below 16MB physical memory

; If there's possible DMA target mapped into high DOS, Windows

; won't check the address and fails.

Aha… so by default, UMBs are below 16 MB, and hence within reach of a PC/AT compatible DMA controller. With the NOWIN3 keyword, the restriction is removed and the UMB physical memory can end up above 16 MB.

When loading drivers into UMBs, 386MAX probably does not use the dedicated DMA buffer because the memory is contiguous and (it believes) addressable by the DMA controller.

By default, that is actually true. I managed to create a configuration (NOWIN3 keyword, PCI system incorrectly identified as EISA DMA capable, loading drivers from floppy to UMBs) where DMA goes wrong.

Now it’s clearer why the problem went unnoticed. Let’s recap the conditions necessary to trigger the bug:

- Machine with more than 16 MB RAM (common now, not in early to mid-1990s)

- Machine with a 1996 or later PCI chipset (incorrectly assumed to be EISA DMA capable)

- 386MAX providing high memory aka UMBs (typical)

- DOS using UMBs (common)

- Loading drivers into UMBs via DEVICEHIGH/LOADHIGH (common)

- 386MAX Windows 3.x support disabled via NOWIN3 (very uncommon?)

- Booting from floppy (not common)

This scenario, although easy to reproduce, is just exotic enough that it’s entirely plausible that it wouldn’t have been encountered when 386MAX was popular.

In July 1990, Microsoft released a specification for Virtual DMA Services, or VDS. This happened soon after the release of Windows 3.0, one of the first (though not the first) providers of VDS. The VDS specification was designed for writers of real-mode driver code for devices which used DMA, especially bus-master DMA.

Why Bother?

Let’s start with some background information explaining why VDS was necessary and unavoidable.

In the days of PCs, XTs, and ATs, life was simple. In real mode, there was a given, immutable relationship between CPU addresses (16-bit segment plus 16-bit offset) and hardware visible (20-bit or 24-bit) physical addresses. Any address could be trivially converted between a segment:offset format and a physical address.
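As a small illustration of that fixed relationship, here is a sketch in Python. The function name and the `address_bits` parameter are made up for this example; the arithmetic itself (segment times 16 plus offset) is the standard real-mode rule.

```python
def real_mode_to_physical(segment: int, offset: int, address_bits: int = 20) -> int:
    """Convert a real-mode segment:offset pair to a physical address."""
    if not (0 <= segment <= 0xFFFF and 0 <= offset <= 0xFFFF):
        raise ValueError("segment and offset must be 16-bit values")
    linear = (segment << 4) + offset
    # With 20 address lines the result wraps at 1 MB; with 24 it does not.
    return linear & ((1 << address_bits) - 1)
```

Many different segment:offset pairs name the same byte, for example 1234:0010 and 1235:0000, but each pair always maps to exactly one physical address. That is the assumption paging breaks.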

When the 386 came along, things got complicated. When paging was enabled, the CPU’s memory-management unit (MMU) inserted a layer of translation between “virtual” and physical addresses. Because paging could be (and often was) enabled in environments that ran existing real-mode code in the 386’s virtual-8086 (V86) mode, writers of V86-mode control software had to contend with a nasty problem related to DMA, caused by the fact that any existing real-mode driver software (which notably included the system’s BIOS) had no idea about paging.

The first and most obvious “problem child” was the floppy. The PC’s floppy drive subsystem uses DMA; when the BIOS programs the DMA controller, it uses the real-mode segmented 16:16 address to calculate the corresponding physical address, and uses that to program the DMA page register and the 8237 DMA controller itself.

That works perfectly well… until software tries to perform any floppy reads or writes to or from paged memory. In the simplest case of an EMM386 style memory manager (no multiple virtual DOS machines), the problem strikes when floppy reads and writes target UMBs or the EMS page frame. In both cases, DOS/BIOS would usually work with a segmented address somewhere between 640K and 1M, but the 386’s paging unit translates this address to a location somewhere in extended memory, above 1 MB.

The floppy driver code in the BIOS does not and can not have any idea about this, and sets up the transfer to an address between 640K and 1M, where there often isn’t any memory at all. Floppy reads and writes are not going to work.

DMA Controller Virtualization

For floppy access, V86 mode control software (EMM386, DesqView, Windows/386, etc.) took advantage of the ability to intercept port I/O. The V86 control software intercepts some or all accesses to the DMA controller. When it detects an attempt (by the DMA controller) to access paged memory where the real-mode address does not directly correspond to a physical address, the control software needs to do extra work. In some cases, it is possible to simply change the address programmed into the DMA controller.

In other cases it’s not. The paged memory may not be physically contiguous. That is, real-mode software might be working with a 16 KB buffer, but the buffer could be stored in five 4K pages that aren’t located next to each other in physical memory.

That’s something the PC DMA controller simply can’t deal with: it can only transfer to or from contiguous physical memory. And there are other potential problems. The memory could be above 16 MB, not addressable by the PC/AT DMA controller at all. Or it might be contiguous but straddle a 64K boundary, which is another thing the standard PC DMA controller can’t handle.
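The three obstacles can be expressed as a simple check. This is a hedged sketch in Python, not code from any real memory manager: `dma_problems` and its arguments are hypothetical, pages are assumed to be 4K, and the 64K boundary rule assumes an 8-bit DMA channel such as the one used by the floppy controller.

```python
PAGE_SIZE = 4096
ISA_DMA_LIMIT = 16 * 1024 * 1024
DMA_BOUNDARY = 64 * 1024  # assumption: 8-bit DMA channel


def dma_problems(page_frames, first_offset, length):
    """List the reasons a buffer cannot be handed to the PC DMA controller.

    page_frames  -- physical addresses of the 4K pages backing the buffer
    first_offset -- offset of the buffer's first byte within the first page
    length       -- transfer length in bytes
    """
    if first_offset + length > len(page_frames) * PAGE_SIZE:
        raise ValueError("page list does not cover the whole buffer")
    problems = []
    contiguous = all(b == a + PAGE_SIZE
                     for a, b in zip(page_frames, page_frames[1:]))
    if not contiguous:
        problems.append("not physically contiguous")
    if any(frame + PAGE_SIZE > ISA_DMA_LIMIT for frame in page_frames):
        problems.append("above 16 MB")
    if contiguous:
        # A boundary check only makes sense for one contiguous run.
        start = page_frames[0] + first_offset
        end = start + length - 1
        if start // DMA_BOUNDARY != end // DMA_BOUNDARY:
            problems.append("crosses a 64K boundary")
    return problems
```

An empty list means the control software could simply reprogram the address; anything else calls for a different strategy.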

In such cases, the V86 control software must allocate a separate, contiguous buffer in physical memory that is fully addressable by the PC DMA controller. DMA reads must be directed into the buffer, and subsequently copied to the “real” memory which was the target of the read. For writes, memory contents must be first copied to the DMA buffer and then written to the device.
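A toy model of the read path may make the two steps clearer. This Python sketch simulates physical memory with a `bytearray`; the function name, the `device_read` callback, and the page-aligned target are all assumptions made for the illustration.

```python
PAGE_SIZE = 4096


def dma_read_via_buffer(device_read, memory, buffer_addr, page_frames, length):
    """Simulate a DMA read into scattered pages through a contiguous buffer.

    device_read -- hypothetical callable returning `length` bytes from a device
    memory      -- bytearray standing in for physical memory
    buffer_addr -- physical address of the contiguous DMA buffer
    page_frames -- physical addresses of the (page-aligned) target pages
    """
    data = device_read(length)
    if len(data) != length:
        raise ValueError("short transfer")
    if length > len(page_frames) * PAGE_SIZE:
        raise ValueError("target pages too small for the transfer")
    # Step 1: the device transfers into the contiguous DMA buffer.
    memory[buffer_addr:buffer_addr + length] = data
    # Step 2: the control software copies the data to the real target pages.
    copied = 0
    for frame in page_frames:
        chunk = min(PAGE_SIZE, length - copied)
        if chunk == 0:
            break
        source = buffer_addr + copied
        memory[frame:frame + chunk] = memory[source:source + chunk]
        copied += chunk
    return copied
```

A write would run the same two steps in the opposite order: copy from the scattered pages into the buffer first, then let the device read from the buffer.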

All this can be done more or less transparently by the V86 control software, at least for standard devices like the floppy.

DMA Bus Mastering

The V86 control software is helpless in the face of bus-mastering storage or network controllers. There is no standardized hardware interface, and often no I/O ports to intercept, either. Bus mastering hardware does not utilize the PC DMA controller and has its own built in DMA controller.

While bus mastering became very common with EISA and PCI, it had been around since the PC/AT, and numerous ISA based bus-mastering controllers did exist.

This became a significant problem circa 1989. Not only were there several existing bus-mastering ISA SCSI HBAs on the market available as options (notably the Adaptec 1540 series), but major OEMs including IBM and Compaq were starting to ship high-end machines with a bus-mastering SCSI HBA (often MCA or EISA) as a standard option.

The V86 control software had no mechanism to intercept and “fix up” bus-mastering DMA transfers. Storage controllers were especially critical, because chances were high that without some kind of intervention, software like Windows/386 or Windows 3.0 in 386 Enhanced mode wouldn’t even load, let alone work.

The first workaround was to add an intermediate buffer. Some piece of software, often a disk cache, would allocate a buffer in conventional memory (below 640K), and all disk accesses were funneled through it. This technique was often called double-buffering.

It was also far from ideal. Double-buffering reduced the performance of expensive, supposedly best and fastest storage controllers. And it ate precious conventional memory.

The Windows/386 Solution

Microsoft’s Windows/386 had to contend with all these problems. The optimal Win/386 solution was a native virtual driver, or VxD, which would interface with the hardware.

Due to lack of surviving documentation, it’s difficult to say exactly what services Windows/386 version 2.x offered. But we know exactly what Windows 3.0 offered when operating in 386 Enhanced mode.

Windows 3.0 came with the Virtual DMA Device aka VDMAD. This VxD virtualizes the 8237 DMA controller, but also offers several services intended to be used by drivers of bus-mastering DMA controllers.

For the worst case scenario which requires double buffering, VDMAD has a contiguous DMA buffer; this buffer can be requested using the VDMAD_Request_Buffer and returned with VDMAD_Release_Buffer. While the VDMAD API could handle multiple buffers, Windows 3.x in reality only had one buffer. The buffer is in memory that is not necessarily directly addressable by the callers. The VDMAD_Copy_From_Buffer and VDMAD_Copy_To_Buffer APIs take care of this.

In some cases, double buffering is not needed. The VDMAD_Lock_DMA_Region (and the corresponding VDMAD_Unlock_DMA_Region) API can be used if the target memory is contiguous and accessible by the DMA controller. The OS will lock the memory, which means the physical underlying memory can’t be moved or paged out until it’s unlocked again. This is obviously necessary in a multi-tasking OS, because the target memory must remain in place until a DMA transfer is completed.

In the ideal scenario, a bus-mastering DMA controller supports scatter-gather. That is, the device itself can accept a list of memory descriptors, each with a physical memory address and corresponding length. Thus a buffer can be “scattered” in physical memory and “gathered” by the controller into a single entity. DMA controllers with scatter-gather are ideally suited for operating systems using paging. With scatter-gather, there is no need for double-buffering or any other workarounds.

The VDMAD_Scatter_Lock API takes the address of a memory buffer, locks its pages in memory, and fills in an “Extended DMA Descriptor Structure” (Extended DDS, or EDDS) with a list of physical addresses and lengths. The list from the EDDS is then supplied to the bus-mastering hardware. The VDMAD_Scatter_Unlock API unlocks the buffer once the DMA transfer is completed.
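To show what such a list looks like, here is a rough Python sketch of building address/length pairs from a page mapping. It is not the VDMAD implementation and does not reproduce the real EDDS layout: the function name and the dictionary used as a page table are hypothetical, and page locking is left out entirely.

```python
PAGE_SIZE = 4096


def build_scatter_list(page_table, linear_addr, length):
    """Return (physical_address, length) regions covering a linear buffer.

    page_table -- hypothetical dict mapping linear page numbers to the
                  physical address of the backing 4K page
    """
    regions = []
    addr, remaining = linear_addr, length
    while remaining > 0:
        page, offset = divmod(addr, PAGE_SIZE)
        phys = page_table[page] + offset
        chunk = min(PAGE_SIZE - offset, remaining)
        if regions and regions[-1][0] + regions[-1][1] == phys:
            # Physically adjacent to the previous region: extend it.
            regions[-1] = (regions[-1][0], regions[-1][1] + chunk)
        else:
            regions.append((phys, chunk))
        addr += chunk
        remaining -= chunk
    return regions
```

For a buffer whose first two pages happen to be adjacent in physical memory and whose third is elsewhere, the result is two regions rather than three, which keeps the list handed to the hardware short.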

When it is available, using scatter/gather does not require any additional buffers and avoids extraneous copying. It takes full advantage of bus-mastering hardware. All modern DMA controllers (storage, networking, USB, audio, etc.) use scatter-gather, and all modern operating systems offer similar functionality to lock a memory region and return a list of corresponding physical addresses and lengths.

The VDMAD VxD also offers services to disable or re-enable default translation for standard 8237 DMA channels, and a couple of other minor services.

VDS, or Virtual DMA Services

Why the long detour into the details of a Windows VxD? Because in Windows 3.x, VDS is nothing more than a relatively thin wrapper around the VDMAD APIs. In fact VDS is implemented by the VDMAD VxD (the Windows 3.1 source code is in the Windows 3.1 DDK; unfortunately the Windows 3.0 DDK has not yet been recovered).

VDS offers the following major services:

- Lock and unlock a DMA region

- Scatter/gather lock and unlock a region

- Request and release a DMA buffer

- Copy into and out of a DMA buffer

- Disable and enable DMA translation

These services obviously rather directly correspond to VDMAD APIs. VDS provides a small amount of added value though.

For example, the API to lock a DMA region can optionally rearrange memory pages to make the buffer physically contiguous, if it wasn’t already (needless to say, this may fail, and many VDS providers do not even implement this functionality). The API can likewise allocate a separate DMA buffer, optionally copy to it when locking, or optionally copy from the buffer when unlocking.

The VDS specification offers a list of possible DMA transfer scenarios, arranged from best to worst:

- Use scatter/gather. Of course, hardware must support this, and not all hardware does.

- Break up DMA requests into small pieces so that double-buffering is not required. This technique will help a lot, but won’t work in all cases (e.g. when the target buffer is not contiguous).

- Break up transfers and use the OS-provided buffer, which is at least 16K in size according to the VDS specification. This involves double-buffering and splitting larger transfers, hurting performance the most.
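The second and third scenarios both come down to splitting a request. The following Python sketch shows the arithmetic only; the function names are hypothetical, and the 4K page size and the idea of splitting at page boundaries are assumptions made for illustration (a piece that stays within one page is physically contiguous by construction).

```python
PAGE_SIZE = 4096
DMA_BUFFER_SIZE = 16 * 1024  # minimum buffer size cited from the VDS specification


def split_at_page_boundaries(linear_addr, length):
    """Scenario 2: pieces that never cross a page, so no double-buffering."""
    pieces = []
    while length > 0:
        chunk = min(PAGE_SIZE - linear_addr % PAGE_SIZE, length)
        pieces.append((linear_addr, chunk))
        linear_addr += chunk
        length -= chunk
    return pieces


def split_for_buffer(length, buffer_size=DMA_BUFFER_SIZE):
    """Scenario 3: (offset, length) pieces that each fit in the DMA buffer."""
    pieces = []
    offset = 0
    while offset < length:
        chunk = min(buffer_size, length - offset)
        pieces.append((offset, chunk))
        offset += chunk
    return pieces


page_pieces = split_at_page_boundaries(0x1F00, 0x300)
buffer_pieces = split_for_buffer(40 * 1024)
```

A 40K transfer through a 16K buffer becomes three separate device operations, each with its own copy, which is why this scenario sits at the bottom of the list.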

VDS Implementations

From the above it’s apparent that Windows 3.0 was likely the canonical VDS implementation. But it was far from the only one, and it wasn’t even the first one released. More or less any software using V86 mode and paging had to deal with the problem one way or another.

An instructive list can be found, for example, in the documentation for Adaptec ASW-1410, the DOS drivers for the AHA-154x SCSI HBAs. The ASPI4DOS.SYS driver had the ability to provide double-buffering, with all the downsides. Double-buffering was not required with newer software which provided VDS. The list included the following:

- Windows 3.0 (only relevant in 386 Enhanced mode)

- DOS 5.0 EMM386

- QEMM 5.0

- 386MAX 4.08

- Generally, protected mode software with VDS support

A similar list was offered by IBM, additionally including 386/VM.

It appears that Quarterdeck’s QEMM 5.0 may have been the first publicly available VDS implementation in January 1990. Note that QEMM 5.0 was released before Windows 3.0.

VDS was also implemented by OS/2. It wasn’t present in the initial OS/2 2.0 release but was added in OS/2 2.1.

Bugs

The VDS implementation in Windows 3.0 was rather buggy, and it’s obvious that at least some of the functionality was completely untested.

For example, the functions to copy to/from a DMA buffer (VDS functions 09h/0Ah) have a coding error which causes buffer size validation to spuriously fail more often than not; that is, the functions fail because they incorrectly determine that the destination buffer is too small when it’s really not. Additionally, the function to release a DMA buffer (VDS function 04h) fails to do so unless the flag to copy out of the buffer is also set.

There was of course a bit of a chicken-and-egg problem. VDS was to be used with real mode device drivers, none of which were supplied by Microsoft. It is likely that some of the VDS functionality in Windows 3.0 was tested with real devices prior to the release, but certainly not all of it.

VDS Users

In the Adaptec ASPI4DOS.SYS case, the driver utilizes VDS and takes over the INT 13h BIOS disk service for drives controlled by the HBA’s BIOS.

Newer Adaptec HBAs, such as the AHA-154xC and later, come with a BIOS which itself uses VDS. This poses an interesting issue because the BIOS must be prepared for VDS to come and go. That is not as unlikely as it might sound; for example on a system with just HIMEM.SYS loaded, there will be no VDS. If Windows 3.x in 386 Enhanced mode is started, VDS will be present and must be used, but when Windows terminates, VDS will be gone again.

This is not much of a problem for disk drivers; VDS presence can be checked before each disk transfer and VDS will be either used or not. It’s trickier for network drivers though. If a network driver is loaded when no VDS is present, it may set up receive buffers and program the hardware accordingly. If a VDS provider then starts and remaps that memory, the hardware will keep writing to the physical addresses it was originally given. For that reason, the VDS specification strongly suggests that VDS implementations should leave existing memory (e.g. conventional memory) in place, so that already-loaded drivers continue to work.
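The per-transfer check used by disk drivers can be sketched as follows. The detail that VDS presence is signaled by bit 5 of the byte at 0040:007Bh in the BIOS data area is not stated in this article; it is how the VDS specification describes detection, to the best of my understanding. Everything else here (the function names, the `vds_lock` callback, modeling the BIOS data area as a byte array) is a hypothetical Python model, not driver code.

```python
VDS_FLAG_OFFSET = 0x7B  # byte at 0040:007Bh, relative to segment 0040h
VDS_PRESENT_BIT = 0x20  # bit 5 (assumed from the VDS specification)


def vds_present(bios_data_area):
    """Check the VDS presence flag in a model of the BIOS data area."""
    return bool(bios_data_area[VDS_FLAG_OFFSET] & VDS_PRESENT_BIT)


def address_for_transfer(bios_data_area, segment, offset, vds_lock):
    """Pick the address to program into the hardware for one transfer.

    vds_lock is a hypothetical callback standing in for a VDS lock call
    that returns the physical address of the region.
    """
    if vds_present(bios_data_area):
        return vds_lock(segment, offset)
    # No VDS: assume plain real mode, where linear equals physical.
    return (segment << 4) + offset


bda = bytearray(256)  # flag clear: e.g. only HIMEM.SYS loaded
plain = address_for_transfer(bda, 0x1234, 0x0010, lambda s, o: 0)

bda[VDS_FLAG_OFFSET] |= VDS_PRESENT_BIT  # e.g. Windows 3.x in 386 Enhanced mode started
mapped = address_for_transfer(bda, 0x1234, 0x0010, lambda s, o: 0xABC000)
```

Because the check is repeated for every transfer, the same driver or BIOS keeps working as VDS comes and goes. A network driver with long-lived receive buffers has no such natural checkpoint.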

Not Just SCSI

Documentation for old software (such as Windows 3.0) often talks about “busmastering SCSI controllers” as if they were the only class of devices affected. That was never really true, but bus-mastering SCSI HBAs were by far the most widespread class of hardware affected by paging and DMA not playing well together.

By 1990, the Adaptec 154x HBAs were already well established (the AHA-1540 had been available since about 1987), and Adaptec was not the only vendor of bus-mastering SCSI HBAs.

Bus-mastering Ethernet adapters also started appearing in 1989-1990, such as ones based on the AMD LANCE or Intel 82586 controllers. Later PCI Ethernet adapters almost exclusively used bus mastering. Their network drivers for DOS accordingly utilized VDS.

VDS Documentation

Microsoft released the initial VDS documentation in July 1990 in a self-extracting archive aptly named VDS.EXE (as documented in KB article Q63937). After the release of Windows 3.1, Microsoft published an updated VDS specification in October 1992, cunningly disguised in a file called PW0519T.TXT; said file was also re-published as KB article Q93469.

IBM also published VDS documentation in the PS/2 BIOS Technical Reference, without ever referring to ‘VDS’. The IBM documentation is functionally identical to Microsoft’s, although it was clearly written independently. It is likely that IBM was an early VDS user in PS/2 machines equipped with bus-mastering SCSI controllers.

Original VDS documentation is helpfully archived here, among other places.

Conclusion

VDS was a hidden workhorse making bus-mastering DMA devices transparently work in DOS environments. It was driven by necessity, solving a problem that was initially obscure but by around 1989 increasingly widespread. The interface was very similar to the API of the Windows 3.0 VDMAD VxD, but VDS was implemented by more or less every 386 memory manager. It was used by loadable DOS drivers but also by the ROM BIOS of post-1990 adapters.